The Positive Impact of Intelligent Automation in Financial Services

Over the past 20 years, we have seen traditional process reengineering vastly improve the efficiency of operational processes in financial services organizations. These organizations have embraced change, taking straight-through processing of transactions to new levels of performance. But the potential of these traditional methods to drive further improvements is limited.

The challenge management now faces is how to push automation within these organizations beyond what would normally be considered their operating limits.

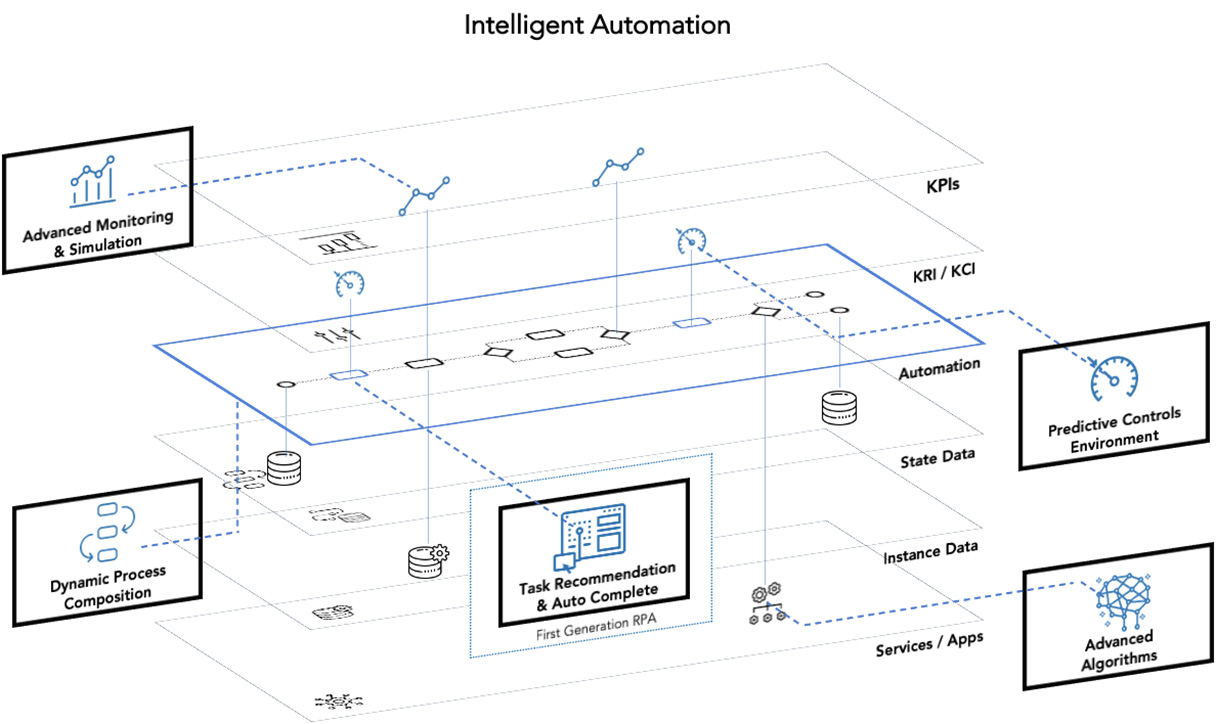

To achieve this goal, we believe organizations will need to use highly scalable, process-centric technologies. These tools must support both automated and manual processes, along with intelligent algorithms that use machine learning and deep learning methods, on top of a distributed, consensus-based platform. Bringing process and these methods together will allow organizations to move beyond existing constraints and create core processing platforms that deliver true competitive advantage and market agility.

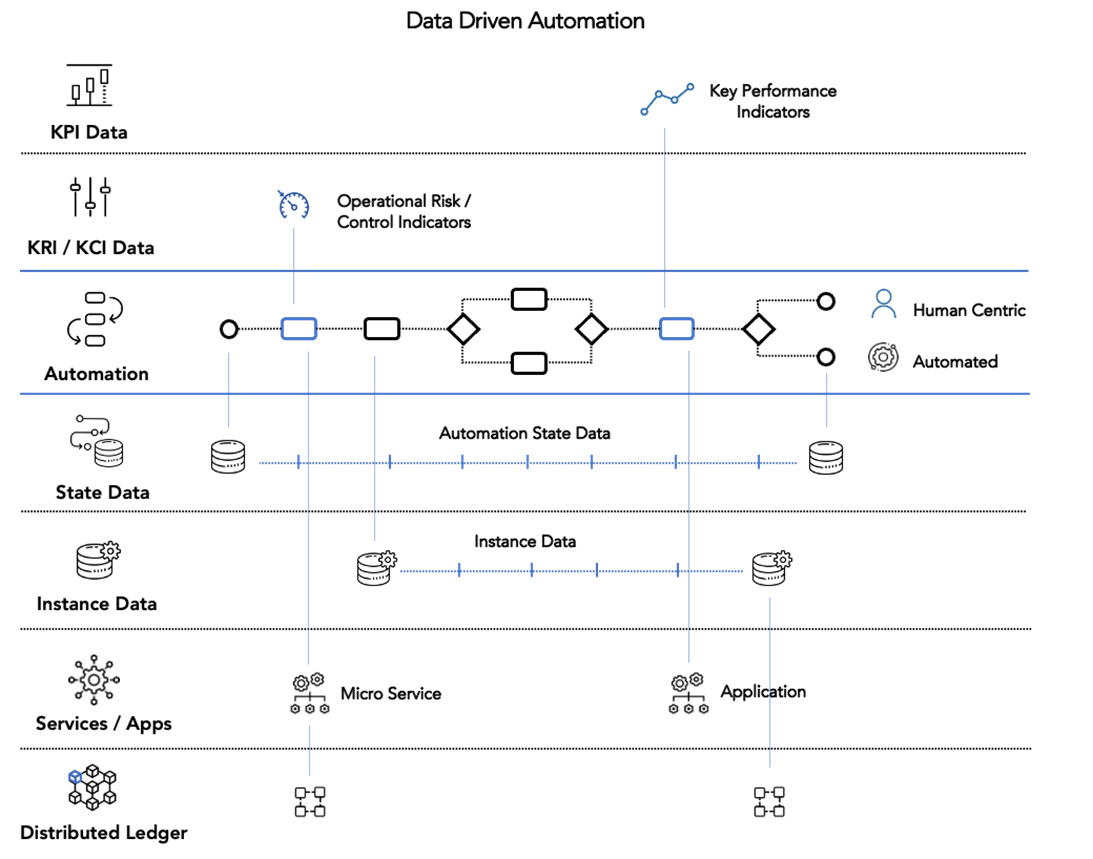

As organizations start this journey, it’s important to recognize that automation is driven by data. Data is the key enabler and provides the basis for incorporating and leveraging advanced algorithms.

Understanding and codifying core operating and transactional processes and associated data is a foundational component of achieving the highest levels of automation. Consider these areas:

Gaining access to process data, and being able to leverage it, will create a foundation for driving efficiencies. Processes built on this foundation can use advanced algorithms, deployed across the environment, to create automations and further improve operational efficiency without significant development effort.

Intelligent automation methods will drive significant change across many aspects of a financial services organization’s operating model.

They make it possible to reach very high levels of automation by leveraging:

Financial services organizations are digital by default. For the most part, their products are dematerialized and can be viewed, at a high level, as an abstraction of data, processes, and calculations or algorithms that are triggered by events over time. Although many financial products are already highly automated, we envision that additional automation will not only increase efficiency but also create opportunities for new, innovative products and services.

Change is coming, and financial services organizations will inevitably need to evolve and adapt their processes and operating models: they will need to take advantage of highly automated and autonomous products and services before their competitors or new market entrants do.

Register for the Hitachi Financial Services Summit to Hear More from Thomas De Souza

Thomas is a multidisciplinary technologist with an eye for business model innovation and leading-edge data technologies. He has more than 20 years of experience in financial services with Booz & Co, PwC, a VC-backed FinTech, and global banks including JP Morgan, Deutsche Bank and Citi.